Week 4: Labs 2-5

- Camera for Tracking – A webcam, a depth camera (such as a Microsoft Kinect or Intel RealSense), or an infrared camera. A regular webcam paired with OpenCV is enough for basic tracking.

- Computer with Tracking Software – You could use OpenCV (Python or C++) for motion tracking, or PoseNet (JavaScript, with TensorFlow.js) for body tracking.

- Projector for Light Projection – A standard projector connected to the computer; the image it projects updates dynamically based on the person's position.

- Processing Unit – A laptop or desktop computer, or a single-board computer such as a Raspberry Pi (if the tracking is lightweight).

- Software Logic – A program that:

  - Captures a live video feed.

  - Detects the figure’s position.

  - Maps that position to the projected light’s movement.

  - Adjusts the projection in real time.
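The four software steps can be sketched as a small pipeline. This is a minimal, hypothetical example, not the setup used in the labs: each "frame" is assumed to already be a foreground mask (a nested list where 1 marks the person), standing in for what a real system might get from a camera via OpenCV's `cv2.VideoCapture` plus background subtraction. The function names (`detect_centroid`, `map_to_projector`, `smooth`, `track`) are illustrative, and the linear mapping assumes the camera and projector cover the same, aligned area. A real installation would usually calibrate instead, for example with OpenCV's `cv2.findHomography`.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Optional, Tuple

# Hypothetical frame format: 1 = foreground (person), 0 = background.
Mask = List[List[int]]
Point = Tuple[float, float]


@dataclass
class Resolution:
    width: int
    height: int


def detect_centroid(mask: Mask) -> Optional[Point]:
    """Detect: average (x, y) of all foreground pixels, or None if nobody is in view."""
    total = sum_x = sum_y = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                total += 1
                sum_x += x
                sum_y += y
    if total == 0:
        return None
    return sum_x / total, sum_y / total


def map_to_projector(point: Point, cam: Resolution, proj: Resolution) -> Point:
    """Map: linearly rescale camera pixels to projector pixels.
    Assumes camera and projector see the same, aligned rectangle."""
    x, y = point
    px = x * (proj.width - 1) / max(cam.width - 1, 1)
    py = y * (proj.height - 1) / max(cam.height - 1, 1)
    return px, py


def smooth(prev: Optional[Point], new: Point, alpha: float = 0.5) -> Point:
    """Exponential moving average so the light does not jitter between frames."""
    if prev is None:
        return new
    return (prev[0] + alpha * (new[0] - prev[0]),
            prev[1] + alpha * (new[1] - prev[1]))


def track(frames: Iterable[Mask], cam: Resolution, proj: Resolution,
          alpha: float = 0.5) -> Iterator[Optional[Point]]:
    """Adjust: for each captured frame, yield where the light should be.
    If nobody is detected, the light holds its last position."""
    position: Optional[Point] = None
    for mask in frames:
        centroid = detect_centroid(mask)
        if centroid is not None:
            position = smooth(position, map_to_projector(centroid, cam, proj), alpha)
        yield position


if __name__ == "__main__":
    empty = [[0] * 5 for _ in range(5)]
    person = [
        [0, 0, 0, 0, 0],
        [0, 0, 0, 1, 1],
        [0, 0, 0, 1, 1],
        [0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0],
    ]
    cam, proj = Resolution(5, 5), Resolution(9, 9)
    print(list(track([empty, person, person], cam, proj)))
```

Smoothing is a design choice rather than a requirement: a lower `alpha` makes the light lag behind the person but move more calmly, while `alpha = 1.0` follows the raw detection exactly.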

Possible Implementations:

- Silhouette Tracking – Projecting a white spotlight or an artistic shape around the detected figure.

- Pose-Aware Projection – Adjusting colors or shapes based on body posture.

- Interactive Shadows – Creating an inverse shadow, where the light moves in the opposite direction to the person.
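Two of these ideas can be sketched on top of a tracked projector position. This is a hypothetical illustration, not a finished effect: `spotlight_frame` draws a white circle around a point (a simple stand-in for silhouette tracking), and `inverse_position` is one possible reading of the inverse shadow, reflecting the point through the centre of the projector frame so the light moves left when the person moves right. Frames are plain nested lists of grayscale values (0 to 255) so the sketch runs without OpenCV; in practice they would be image arrays sent to the projector window.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Frame = List[List[int]]  # grayscale rows, 0 = black, 255 = white


def inverse_position(point: Point, width: int, height: int) -> Point:
    """Reflect a projector-space point through the frame centre.
    Moving right by dx moves the result left by dx (and likewise vertically)."""
    x, y = point
    return (width - 1) - x, (height - 1) - y


def spotlight_frame(center: Point, radius: float, width: int, height: int) -> Frame:
    """Return a frame that is white inside a circle around `center`, black elsewhere."""
    cx, cy = center
    r2 = radius * radius
    return [
        [255 if (x - cx) ** 2 + (y - cy) ** 2 <= r2 else 0 for x in range(width)]
        for y in range(height)
    ]


if __name__ == "__main__":
    person_at = (7.0, 3.0)                      # e.g. a tracked projector position
    shadow_at = inverse_position(person_at, 9, 9)
    print(shadow_at)                            # mirrored spot for the inverse shadow
    for row in spotlight_frame((1, 1), 1, 3, 3):
        print(row)
```

Reflecting both axes is only one choice; mirroring x alone (keeping y) may look more natural when the projection is on a wall and the person mostly walks side to side.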

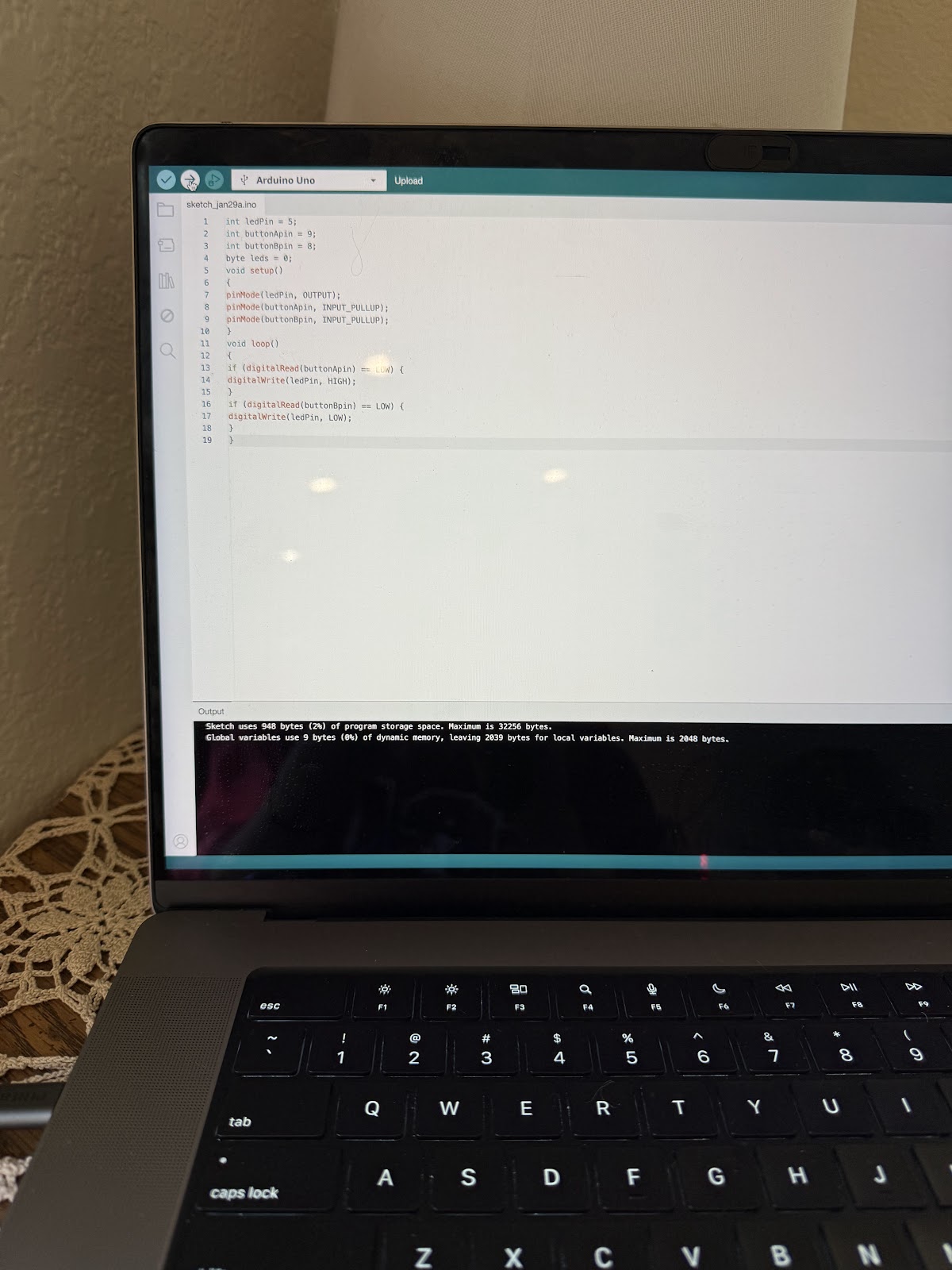

This week I worked hard to catch up on the labs, finishing them through Lab 5. Things clicked much more for me this week. The week before, I was confused reading the labs and couldn't follow along, but now I am in a much better flow.

Lab 2

These are most of the videos and photos I collected. The issues I ran into came only from connecting the wires to the wrong spots, which gave me coordinates of 0 instead of higher values.
